Project Astra by Google DeepMind: A Glimpse into the Future of AI Agents

- Kalyan Bhattacharjee

- Aug 24, 2025

Updated: Oct 23, 2025

Introduction

In May 2024, at Google I/O, Google DeepMind unveiled Project Astra, a next-generation AI agent that pushes the boundaries of how machines understand and interact with the world. This isn’t just another chatbot: Astra is designed to see, listen, analyze, and respond in real time, using video, voice, and memory to act as a smart assistant that keeps up with what is happening around you.

Think of it as Google’s vision for an AI-powered future where machines can observe, understand context, and proactively assist in everyday tasks. Let’s dive deep into what Project Astra is, what it can do, and why it’s one of the most exciting developments in the world of artificial intelligence.

What Is Project Astra?

Project Astra is DeepMind’s experimental AI agent that combines multimodal understanding (visual, audio, and textual) with real-time processing capabilities. It’s built to:

Perceive its environment via a smartphone or camera-equipped glasses.

Understand voice commands and spoken questions.

Respond instantly using natural language and contextual awareness.

Remember past interactions and details over time.

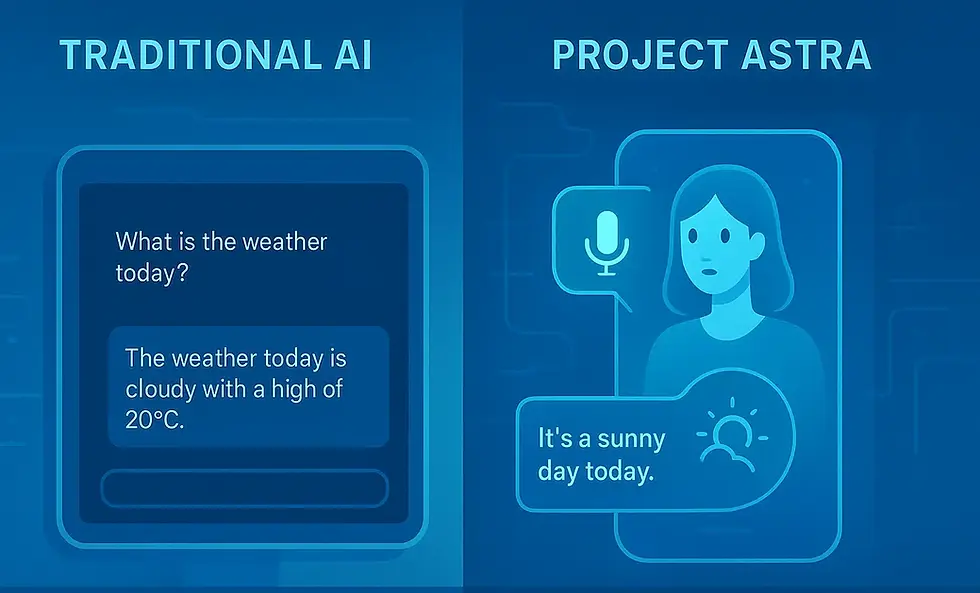

Essentially, Astra is an attempt to move beyond static chat interfaces like ChatGPT and bring AI into users’ real-world, moment-to-moment experience: always watching, always listening, and always ready to help.

Core Capabilities of Project Astra

Here’s what makes Astra a significant leap forward:

Live Visual Processing

Astra can "see" through a smartphone or wearable device and identify objects, text, and environments in real time. For example, you can point your phone’s camera at a circuit board, ask “What does this part do?”, and Astra will explain it on the spot.

Contextual Voice Assistance

Unlike traditional assistants, Astra keeps track of context over time. If you ask it to “Find my glasses,” it can recall where it last saw them from earlier camera input.
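To make the “Find my glasses” idea more concrete, here is a minimal Python sketch of one way an agent could answer such a question: keep a bounded, timestamped log of object sightings and look up the most recent one. This is purely illustrative. The names `Sighting` and `SightingMemory`, the fixed-size buffer, and the hard-coded labels are hypothetical assumptions, not Astra's actual design; a real system would get labels and locations from a vision model.

```python
from __future__ import annotations

from collections import deque
from dataclasses import dataclass
from typing import Optional


@dataclass
class Sighting:
    """One detected object: what it was, where it was, and when (in seconds)."""
    label: str
    location: str
    timestamp: float


class SightingMemory:
    """Hypothetical bounded memory of recent object sightings.

    Only the newest `capacity` sightings are kept; older entries are
    discarded automatically by the deque, loosely mimicking a limited
    in-session memory.
    """

    def __init__(self, capacity: int = 1000) -> None:
        self._log: deque[Sighting] = deque(maxlen=capacity)

    def record(self, label: str, location: str, timestamp: float) -> None:
        # In a real agent, label and location would come from a vision model.
        self._log.append(Sighting(label, location, timestamp))

    def last_seen(self, label: str) -> Optional[Sighting]:
        # Scan newest-first so the first match is the most recent sighting.
        for sighting in reversed(self._log):
            if sighting.label == label:
                return sighting
        return None


memory = SightingMemory(capacity=100)
memory.record("glasses", "kitchen counter", timestamp=5.0)
memory.record("keys", "hallway table", timestamp=8.0)
memory.record("glasses", "desk", timestamp=12.0)

found = memory.last_seen("glasses")
if found is not None:
    print(f"Your glasses were last seen on the {found.location} (t={found.timestamp}s).")
```

Scanning newest-first returns the latest sighting, which is what a “where did I leave…” question needs. The bounded deque trades long-term recall for predictable memory use.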

Conversational Memory

Astra can remember details from previous conversations and draw on them later, making its responses more relevant and context-aware. This is a significant step toward AI that acts as a genuinely helpful companion.

Multimodal AI

It’s not just about seeing and hearing: Astra processes text, speech, images, objects, and its surroundings together and combines them into a single, unified response. This lets it interact more naturally with users, interpreting real-world scenarios much as people do, by connecting context, visuals, and conversation.

How It Works: The Tech Behind Project Astra

Project Astra is powered by Google DeepMind’s Gemini models, which are designed for multimodal, real-time performance. The agent runs on devices such as smartphones and prototype smart glasses, handling:

Visual input through cameras

Audio input through microphones

Real-time inference using lightweight on-device AI

Cloud-based enhancements for more complex queries

This hybrid approach aims to keep latency low and responsiveness high, which is crucial for making Astra feel “alive” and helpful in the moment.
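The split between lightweight on-device inference and cloud processing for more complex queries can be illustrated with a small routing sketch in Python. Everything here is a hypothetical assumption for illustration: the `Query` fields, the word-count heuristic, the threshold of 15, and the two placeholder handlers stand in for real models. It is not a description of how Astra actually divides work between device and cloud.

```python
from dataclasses import dataclass


@dataclass
class Query:
    """A user request plus the modalities attached to it (hypothetical)."""
    text: str
    has_image: bool = False
    has_audio: bool = False


def estimate_complexity(query: Query) -> int:
    """Crude, hypothetical heuristic: longer and multimodal queries score higher."""
    score = len(query.text.split())
    if query.has_image:
        score += 10
    if query.has_audio:
        score += 5
    return score


def answer_on_device(query: Query) -> str:
    # Placeholder for a small local model: low latency, limited capability.
    return f"[on-device] quick answer to: {query.text}"


def answer_in_cloud(query: Query) -> str:
    # Placeholder for a larger remote model: more capable, adds network delay.
    return f"[cloud] detailed answer to: {query.text}"


def route(query: Query, threshold: int = 15) -> str:
    """Send simple queries to the local model and harder ones to the cloud."""
    if estimate_complexity(query) <= threshold:
        return answer_on_device(query)
    return answer_in_cloud(query)


print(route(Query("What time is it?")))
print(route(Query("What does this part of the circuit board do?", has_image=True)))
```

The first query (4 words, text only) stays on the device, while the second (9 words plus an image) is sent to the cloud. A fixed threshold is the simplest possible policy; a real system might instead weigh network conditions, battery level, or a model's own confidence.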

Use Cases: Where Astra Could Make a Difference

Project Astra paves the way for integrating AI into our everyday routines. Here are a few scenarios where it could excel:

Education & Learning: Instantly explain objects, diagrams, or concepts on the go.

Home Assistance: Identify lost items, monitor home devices, or help with tasks like cooking.

Technical Support: Provide real-time assistance with electronics, software, or tools.

Accessibility: Assist visually impaired users by describing surroundings.

Content Creation: Use voice and visuals to script, explain, or generate content ideas.

Astra vs Other AI Assistants

| Feature | Google Astra | ChatGPT (GPT-4) | Apple Siri |
|---|---|---|---|
| Multimodal Input | ✅ Video, Audio, Text | ✅ Images, Text | ⚠️ Voice and typed text |
| Real-Time Processing | ✅ Low-latency | ⚠️ Cloud-based delay | ✅ Fast but limited |
| Memory & Recall | ✅ Contextual Memory | ✅ (chat-based) | ❌ No memory |
| Visual Understanding | ✅ Yes | ✅ (images only) | ❌ None |
| Device Integration | ✅ Glasses, Mobile | ✅ Web, Apps | ✅ Apple devices only |

Availability: When Will You Get Astra?

At the time of writing, Project Astra is still a research prototype. However, some of its capabilities are expected to reach users through Gemini integration in Google products such as:

Google Assistant

Pixel phones

Android smart glasses (possibly)

Google Search enhancements

Closing Notes

Project Astra is more than just a new AI assistant: it’s a major step toward truly intelligent, real-time agents that blend seamlessly into our environment. With its ability to see, hear, remember, and respond proactively, Astra points to a future where AI becomes a real companion: helpful, aware, and always one step ahead.

Key Takeaways

Project Astra = AI with Eyes, Ears, and Memory

Combines real-time visual + voice + contextual understanding

Could redefine personal assistants and everyday computing

Built by Google DeepMind, powered by Gemini models

Currently in an experimental phase, with a wider rollout expected soon
